Amdahl's law in reverse: the wimpy core advantage

Once a chip’s single-core performance lags by more than a factor to two or so behind the higher end of current-generation

commodity processors, making a business case for switching to the wimpy system becomes increasingly difficult.

– Google's Urs Hölzle, in "Brawny

cores still beat wimpy cores, most of the time"

Google sure knows its own business, so they're probably right when they claim that they need high serial performance.

However, different businesses are different, and there are advantages to "wimpy cores" beyond power savings which are omitted

from the "brawny/wimpy" paper and which are worth mentioning.

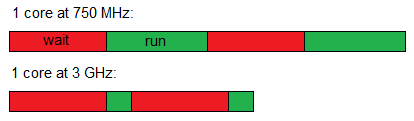

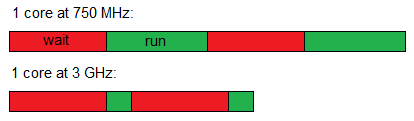

It is a commonplace assumption that a single 3 GHz processor is always better than 4 processors at 750 MHz "because of Amdahl's law". Specifically:

- Some of your tasks can be parallelized to run on 4 cores – these will take the same time to complete on the two

systems.

- Other tasks can only run on one core – these will take 4x more time to complete on the 750 MHz wimpy-core system.

- Some tasks are in-between – say, a task using 2 cores will take 2x more time to complete on the 750 MHz system.

Overall, the 3 GHz system never completes later and sometimes completes much earlier.

However, this assumes that a 3 GHz processor is consistently 4x faster than a 750 MHz processor. This is true in those rare

cherished moments when both processors are running at their peak throughput. It's not true if both are stuck waiting for a

memory request they issued upon a cache miss. For years, memory latency has been lagging behind memory throughput, and the 4x

faster processor does not get a 4x smaller memory latency.

Assuming the latency is the same on the two systems – which is often very close to the truth – and that half

of the time of the 750 MHz system is spent waiting for memory when running a serial task, the 3 GHz CPU will give you a speed-up

of less than 2x.

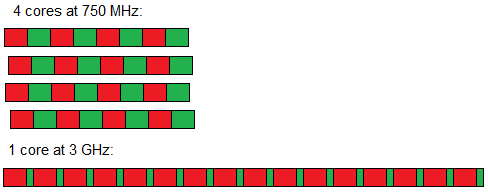

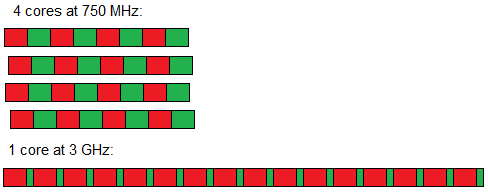

What if the task can run in parallel on 4 cores? Now the 750 MHz system gets a 4x speed-up and completes more than 2x

faster than the 3 GHz system!

(This assumes that the 4 750 MHz cores are not slowed down due to them all accessing the same memory – which, again, is very

close to the truth. Memory bandwidth is usually high enough to serve 4 streams of requests – it's latency

which is the problem. So having several cores waiting in parallel is faster than having one core waiting serially.)

A slow, parallel system outperforming a fast, serial system – Amdahl's law in reverse!

Is memory latency unique?

In a way it isn't – there are many cases where faster systems fail to get to their peak throughput because of some latency or

other. For instance, the cost of mispredicted branches will generally be higher on faster systems.

However, most other latencies of these kinds are much smaller – and are handled rather well by the mechanisms making "brawny"

cores larger and deserving of their name. For example, hardware branch predictors and speculative execution can go a long way in

reducing the ultimate cost of mispredicted branches.

"Brawny" speculative hardware is useful enough to deal with memory latency as well, of course. Today's high-end cores all

have speculative memory prefetching. When you read a contiguous array of memory, the core speculates that you'll keep reading,

fetches data ahead of the currently processed elements, and when time comes to process the next elements, they're already right

there in the cache.

The problem is that this breaks down once you try to use RAM as RAM - a random access memory where you don't go from

index 0 to N but actually "jump around" randomly. (Theoretically this isn't different from branch prediction; in practice,

large, non-contiguous data structures not fitting into caches and "unpredictable" for prefetchers are much more common than

unpredictable branches or overflowing the branch history table.)

Hence we have pledges like this computer architect's kind request to stop using

linked lists:

Why would anyone use a linked-list instead of arrays? I argue that linked lists have become irrelevant in the context of a

modern computer system:

They reduce the benefit of out-of-order execution.

They throw off hardware prefetching.

They reduce DRAM and TLB locality.

They cannot leverage SIMD.

They are harder to send to GPUs.

Note that problems 1, 2 and 4 "disappear" on wimpy cores – that is, such cores don't have out-of-order execution,

hardware prefetchers or SIMD instructions. So a linked list doesn't result in as many missed performance opportunities as it

does on brawny cores.

"Why would anyone use linked lists"? It's not just lists, for starters – it's arrays of pointers as well. "Why array of

pointers" seems exceedingly obvious – you want to keep references instead of values to save space as well as to update something

and actually get the thing updated, not its copy. Also you can have elements of different types – very common with OO code

keeping arrays of base class pointers.

And then using arrays and indexing into them instead of using pointers still leaves you with much of the "access randomness"

problem. A graph edge can be a pointer or an index; and while mallocing individual nodes might result in somewhat poorer

locality than keeping them in one big array, you still bump into problems 1, 2 and 4 for big enough node arrays – because your

accesses won't go from 0 to N.

Of course you could argue that memory indirection is "poor design" in the modern world and that programmers should mangle

their designs until they fit modern hardware's capabilities. But then much of the "brawny vs wimpy" paper's argument is that

brawny cores mean smaller development costs – that you get high performance for little effort. That's no longer true once the

advice becomes to fight every instance of memory indirection.

It's also somewhat ironic from a historical perspective, because in the first place, we got to where we are because of

not wanting to change anything in our software, and still wishing to get performance gains. The whole point of brawny

speculative hardware is, "your old code is fine! Just give me your old binaries and I'll magically speed them up with my latest

and greatest chip!"

The upshot is that you can't have it both ways. Either keep speculating (what, not brawny/brainy enough to guess where the

next pointer is going to point to?), or admit that speculation has reached its limits and you can no longer deliver on the

promises of high serial performance with low development costs.

...is not something I'd want to do.

Amdahl's law has a formal version where you take numbers representing your workload and you get a number quantifying the

benefits a parallel system might give you.

A similar formalization could be done for "reverse Amdahl's law", taking into account, not just limits to parallelization due

to serial bottlenecks (what Amdahl's law does), but also "limits to serialization"/the advantage to parallelization due to

various kinds of latency which is better tolerated by parallel systems.

But I think the point here is that simple formal models fail to capture important factors – not that they should be made more

complex with more input numbers to be pulled out of, erm, let's call it thin air. You just can't sensibly model real programs

and real processors with a reasonably small bunch of numbers.

Speaking of numbers: is time spent waiting actually that

large?

I don't know; depends on the system. Are there theoretically awesome CPUs stuck waiting for various things? Absolutely;

here's that article about 6% CPU utilization in data

servers (it also says that this is viewed as normal by some – as long as RAM and networking resources are utilized. Of

course, by itself the 6% figure doesn't mean that brawny cores aren't better – if those 6% are all short, serial sprints letting

you complete requests quickly, then maybe you need brawny cores. The question is how particular tasks end up being executed and

why.)

Could a "wimpy-core" system improve things for you? It depends. I'm just pointing out why it could.

From big.LITTLE to LITTLE.big, or something

There's a trend of putting a single wimpy core alongside several brawny ones, the wimpy core being responsible for tasks

where energy consumption is important, and the brawny ones being used when speed is required.

An interesting alternative is to put one brawny core and many wimpy ones – the brawny core could run the serial parts and the

wimpy cores could run the parallel parts. If the task is serial, then a brawny core can only be better – perhaps a lot better,

perhaps a little better, but better. If the task is parallel, many wimpy cores might be better than few brawny ones.

(I think both use cases fall under ARM's creatively capitalized "big.LITTLE concept"; if the latter case doesn't, perhaps

LITTLE.big could be a catchy name for this – or we could try other capitalization options.)

Hyperthreading/Barrel threading/SIMT

...is also something that helps hide latency using coarse-grain, thread-level parallelism; it doesn't have to be "whole

cores".

Parallelizing imperative programs

...is/can be much easier and safer than is commonly believed. But that's a subject for a separate series of posts.

Summary

- Faster processors are exactly as slow as slower processors when they're stalled – say, because of waiting for memory;

- Many slow processors are actually faster than few fast ones when stalled, because they're waiting in parallel;

- All this on top of area & power savings of many wimpy cores compared to few brawny ones.

You didn't explicitly say it but GPUs are prime examples of when

wimpy wins.

@Stephen – or, GPUs are a prime example where becoming *less* wimpy

wins. Shader instruction sets have been progressing toward less

wimpiness, greater generality.

@Aaron

Less wimpy than previous gpu cores certainly, but still far more so than

modern x86 chips. I guess there's an optimal amount of wimp/brawn for a

given task, and currently gpu's are on the wimp side...

Sun tried this strategy with Niagra based UltraSPARC CPUs I believe,

arguing that having 64 logical threads at 1Ghz resulted in more

consistent latency, so that 5% of your web requests don't get insanely

high latency in response on a massively oversubscribed system. This

strategy didn't get a whole lot of industry uptake, although I can't say

if it was due to the strategy itself or due to aversion to SPARC /

Solaris / etc.

This argument is predicated on the notion that the workload can be

naturally parallelized. This may not be true: for example, consider that

most financial quote data is represented as a stream of ordered events

which alter the state of the "world". Also, workloads which _can_ be

parallelized must be done in a way that does not introduce false

sharing, which is only as easy as the problem domain and implementation

language allow. Your assertion that parallelism is easier to achieve

than most people believe sounds... well... tough to swallow. Outside of

embarassingly parallel workloads, adding parallelism adds another

dimension to analyze. If people are already skipping out on

memory-hierarchy optimizations, how can we expect that they'll suddenly

pick up the ball on parallelism optimizations?

Regarding hardware prefetching: If an object is larger than a single

cache-line, then brawny prefetching can still be useful for

latency-hiding. If a series of objects was allocated in sequence (or

nearly so), then it is likely that they will appear contiguously in

memory, and again brawny prefetching can help. Memory arenas and pooled

allocations can help in this regard, by keeping your virtual address

space more compact than your pointer-chains would suggest.

Regarding branch misprediction: Intel learned their lesson from the

P4: deep pipelines make branch misprediction expensive. The newest Intel

hardware has a much lower cost to misprediction. Furthermore, brawny

hardware with conditional instructions can eliminate the misprediction

entirely. I don't see an advantage to wimpy hardware here, though I do

see a benefit for otherwise-wimpy hardware that is just brawny enough to

provide a set of conditional instructions.

Regarding SIMD absence from wimpy cores: Effective use of SIMD should

be providing a speedup linear in the size of the SIMD "word". Replacing

a 16-way 8-bit integer calculation with 16 separate threads all doing

8-bit integer calculations sounds like a pretty raw deal. If would only

make sense if the computation wasn't suitable (or was borderline

suitable) for SIMD in the first place.

Sun Niagara processors had extremely slow cores when doing single

threaded tasks (4-8x slower than x86 processor clocked at ~2GHz). That

might be the reason, why it didn't catch up in Industry, where still

from time to time you need decent single-threaded performance.

There's a name for "reverse Amdahl's law" – it is Little's law:

http://en.wikipedia.org/wiki/Little's_law

needed parallelism = latency * throughput

This is nicely explained in the contect of GPU processing here:

http://www.cs.berkeley.edu/~volkov/volkov10-GTC.pdf

@Alex

I think that definitely brings up the additional point on wimpy

performance gains where the number of independent tasks greatly exceeds

the complexity of each task.

"Your assertion that parallelism is easier to achieve than most

people believe sounds... well... tough to swallow."

Just wait a bit for the upcoming posts :) (As to embarrassingly

parallel... depends how easily one is embarrassed :)

@Giga: thanks for the link! Interesting stuff from there: on one

hand, ILP is sometimes a better way to hide latency on GPUs than TLP; on

the other hand, ILP is limited (by 4 in their experiments on GPUs), the

reason presumably being that keeping multiple independent instructions

in flight uses up rather limited hardware scheduling resources. (Of

course TLP is limited as well – by the number of available

registers/register sets; I think it's more easily scalable though –

perhaps that's the reason for the "lies" by CUDA manuals that they site

– that is, NVIDIA is OK with committing to high occupancy being

beneficial on their machines but not to ILP, an example of that being

the reduction of #registers/thread in Fermi that they call a

"regression").

This is Amdahl's law applied to processor speed.

From Wikipedia:

"Amdahl's law [...] is used to find the maximum expected improvement to

an overall system when only part of the system is improved."

In this case, the brawnier core has increased processing speed, but

the same memory latency, so the speedup for your specific task is 1/(0.5

+ 0.5/4) = 1.6

Bringing in multi-core just confuses the point, IMO. You now know

that the bigger core is 1.6 times faster for your workload, so if you

presuppose that the tasks are parallelisable, then obviously 4 > 1.6.

The reason the big core is 1.6 times faster does not matter. The small

cores are not just "waiting in parallel", they are doing everything in

parallel.

(Then again, if the loads are 4-way parallelisable, why did the big

core have to do them in sequence? The programmer could at least have

thrown in some preloads!)

This is Amdahl's law applied to processor speed.

From Wikipedia:

"Amdahl's law [...] is used to find the maximum expected improvement to

an overall system when only part of the system is improved."

In this case, the brawnier core has increased processing speed, but

the same memory latency, so the speedup for your specific task is 1/(0.5

+ 0.5/4) = 1.6

Bringing in multi-core just confuses the point, IMO. You now know

that the bigger core is 1.6 times faster for your workload, so if you

presuppose that the tasks are parallelisable, then obviously 4 > 1.6.

The reason the big core is 1.6 times faster does not matter. The small

cores are not just "waiting in parallel", they are doing everything in

parallel.

(Then again, if the loads are 4-way parallelisable, why did the big

core have to do them in sequence? The programmer could at least have

thrown in some preloads!)

"embarrassingly parallel" has a distinct technical meaning, it's when

you get a slight superlinear speedup, typically because of caches. (if

you get a bigger speed up than, say 10%, your serial version is probably

screwed up.)

Maybe another counter point is, I think that the 'brawnier' chips

have also much brawnier level 2/3 caches, so in the end it is rather

rare/hypothetical case where you can can get advantage using many

wimpier chips. Also when you have 4 independent core accessing memory in

parallel you do tend to have more randomness than one core, specially in

the case where the original access pattern was not that random. Now

naturally when your performance is I/O bound such as in servers, you

need to have some task parallelism in the software layer (having many

independent threads doing asynchronous i/o) but that may be implemented

quite efficiently by a single hardware core (upto a point obviously).

Also the failure of traditional hyper threading, (which may be an

example of trying to wait in parallel in order to hide latency) to get a

marked speedup in real life also suggests there is limited utility in

this direction.

The failure of HT may point to software not being written to exploit

HT; normally hyperthreads are used to run two separate processes with

zero data sharing, and there's not much multi-threaded, single-process

software out there.

As to L2/L3 – you pay quite a penalty for missing L1 and hitting

these instead; better than accessing DRAM of course but still quite a

penalty, so the argument still applies to an extent.

You and I have a lot to discuss about getting speedups from HT in

real life :)

Even if software is written to exploit HT, there are still some

limitations. Intel's software optimization manuals go into this in quite

some detail, but they are extremely dense material. Some of the basic

limitations:

- Newer hardware uses a "micro-op cache" which caches pre-decoded

instruction. If HT is diabled, every CPU gets double the uop cache. A HT

pair of CPUs will not compete for this cache; instead, each gets half of

the cache available.

- HT CPUs still share instruction decoding, which is cited frequently in

the manual as a common bottleneck. So much software will see additional

latency due to instruction decoding. It's not simply a matter of

optimizing the software to run on HT hardware: instruction decoding can

be a bottleneck even without the alternating HT dispatch.

Both of these limitations come with the fact that HT is designed to

double-up on some pieces of hardware (execution units are easy to

parallelize, as are registers) and share other pieces of hardware

(instruction decode, caches). Right off the bat, this means that

efficient HT use is necessarily specialized. For high-performance

software, it will remain a niche because IMHO, there is very little

benefit to try to optimize software for such a specific arrangement

(where shared L1/L2 caches fit into the problem domain).

Let's put it this way: HT is better for worker threads sharing code

and some of the data than for unrelated tasks.

Anyway, I'm not recommending HT for any particular case, just

pointing out one nice thing about it.

Fantastic post, Yossi. Thank you.

Glad you like it; I should add that there's a lot of weight in all

the counter-arguments people brought up in the comments...

@Jeremiah: Sun tried this strategy with Niagra based UltraSPARC CPUs

I believe, arguing that having 64 logical threads at 1Ghz resulted in

more consistent latency, so that 5% of your web requests don't get

insanely high latency in response on a massively oversubscribed

system.

This is true, but it works for more than one type of workload. For

example, last I looked at the benchmarks, a t2000 was an order of

magnitude faster than a contemporary dual Opteron server on MySQL

workloads once a sufficient number of threads were engaged. So, clearly,

if you have workloads that match the architecture, you get big wins (the

fact that people ran minimally parallel benchmarks and declared Niagara

a dud notwithstanding).

@Jeremiah: This strategy didn't get a whole lot of industry uptake,

although I can't say if it was due to the strategy itself or due to

aversion to SPARC / Solaris / etc.

I'm not sure if I agree with that. Niagra is on it's 5th major

architecture refresh. Certainly AMD Bulldozer/Piledriver is a wimpy-core

architecture (though it's success is yet to be proven). More esoteric

things such as Parallella are wimpy-core, and as noted GPUs arguably

are. So I think there's significant industry uptake.

Amdahl's law means more than just threaded vs non threaded

work.

Its total_time= Execution_time_affected / improvement + time_unaffected

.

And the first example I saw about it was about trick question on how

much should improve multiplier if it takes 80% of execution time and you

want to run the program five times faster.

Now the Amdah's law applied to process. Transistor delay has been

exponentially reduced while wire delay being kept constant. ->at some

point transistor delay doesn't apply but at first total delay reduction

of cycle time is exponential if transistor delay dominates.

Then same thing about adding another ALU that gets rarely used and so

on. Amdahl's law totally killed single threaded improvement almost

decade ago, and we are going for 10% yearly improvements nowadays.

Post a comment